Generative AI won't just be about improving financial industry processes and structures. The boom in such tools will be a key management challenge for risk control functions in the 2020s.

This time last year, the words «generative» and «natural language processing» didn’t mean all that much to many. But now that «The Zuck» has announced his intention to end «all diseases» with the help of artificial intelligence, things seem to have come full circle. We are at or near peak AI when it comes to the hype.

Outside of tech circles, it's still very early days, even if other forms of AI and dumb chatbots have been an integral part of the banking business for several years, and whatever the newfangled AI business heads now sprouting up everywhere will tell you.

Getting Passive Aggressive

Much of what has been written on finews.com since ChatGPT landed squarely in the middle of the banking industry’s collective consciousness last year relates to how generative AI can be used to improve client interfaces or cut costs and jobs. Some pieces go even further, suggesting it will turn the industry’s business models upside down.

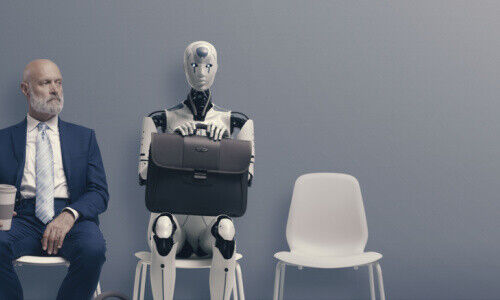

That's par for the course with every new piece of all-encompassing tech that comes along every decade or so, but this time there's a difference. Current tools have significant implications for risk management and control functions. There's already enough to make a case for a fully staffed AI risk function to sit alongside old-school credit and market risk teams and the slightly newer regulatory compliance and financial crime areas.

New Quirks

But why is that? An article in the August issue of «Scientific American» makes it abundantly clear. Citing the work of computer scientists, it concludes that ChatGPT's text-generating artificial intelligence appears to have deteriorated significantly in the space of just a few months.

«Across two tests, including the prime number trials, the June GPT-4 answers were much less verbose than the March ones. Specifically, the June model became less inclined to explain itself. It also developed new quirks,» the magazine reported.

Bad Advice

The mind boggles when such a development is extrapolated onto a new digital wealth management client advisor tool or something similar. You don’t need a fanciful imagination to see how something like that could go viral in the high-net-worth social media influencer space and quickly end up on the junk heap of banking history fiascos.

Although the final word hasn't been uttered on what prompted this deterioration, financial institutions need to manage potential «model drift,» akin to the scrutiny of AI-based algorithms that UBS indicated it had undertaken in June, something finews.com also previously commented on.
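For readers wondering what managing «model drift» might look like in practice, here is a minimal sketch in Python. It is not how UBS or anyone else actually does it. It assumes a risk team keeps a fixed set of benchmark questions with known answers, re-runs them against each new model version, and flags a drop in accuracy or in how much the model explains itself. All names, thresholds, and sample answers are hypothetical and invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Snapshot:
    """Answers one model version gave to a fixed list of benchmark prompts."""
    label: str
    answers: list[str]


def accuracy(snapshot: Snapshot, expected: list[str]) -> float:
    """Share of answers that start with the expected verdict (case-insensitive)."""
    hits = sum(
        ans.strip().lower().startswith(exp.lower())
        for ans, exp in zip(snapshot.answers, expected)
    )
    return hits / len(expected)


def verbosity(snapshot: Snapshot) -> float:
    """Average number of whitespace-separated words per answer."""
    return mean(len(ans.split()) for ans in snapshot.answers)


def drift_report(old: Snapshot, new: Snapshot, expected: list[str],
                 max_accuracy_drop: float = 0.05,
                 max_verbosity_drop: float = 0.5) -> dict:
    """Compare two snapshots; thresholds are arbitrary placeholders."""
    accuracy_drop = accuracy(old, expected) - accuracy(new, expected)
    old_words = verbosity(old)
    # Relative fall in answer length: 0.5 means answers are half as long.
    verbosity_drop = (old_words - verbosity(new)) / old_words if old_words else 0.0
    return {
        "accuracy_drop": accuracy_drop,
        "verbosity_drop": verbosity_drop,
        "needs_review": (accuracy_drop > max_accuracy_drop
                         or verbosity_drop > max_verbosity_drop),
    }


# Made-up sample data, not taken from any published study.
expected = ["yes", "no"]  # is 17077 prime? is 21 prime?
march = Snapshot("march", [
    "Yes. 17077 is prime: it has no divisor up to its square root.",
    "No. 21 equals 3 times 7.",
])
june = Snapshot("june", ["No.", "No."])

report = drift_report(march, june, expected)
print(report["needs_review"])
```

The point of the design is that the benchmark stays frozen while the model changes, so any movement in the numbers comes from the model. Whether a flagged result actually matters is still a call for a human reviewer.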

Wrong Direction

But trends seem to be pointing in precisely the opposite direction. An AI governance report (registration required) by global law firm DLA Piper said that 96 percent of companies were rolling out some form of AI, while slightly more than half were ignoring their own legal and compliance teams when doing so.

That seems distinctly unwise when governments worldwide are looking at ideas for regulating AI, including the need for independent oversight. Something or someone is needed to manage those kinds of developments closely, and it's probably better that the team is human. At least for now.